Detecting Fire Engines in Imagery

Finding fire trucks

One of the challenges in flying drones in public airspace is the need to peacefully coexist with everyone else. Emergency first responders like firefighters don't need quadcopters buzzing around, getting in their way.

Some consumer-level drones already have a "return to home" feature, where the drone automatically flies up to a safe height, returns to the spot it launched from, and lands. This can happen when the battery is low, or when the pilot hits a button.

What if we tried to automate returning to home when there is a fire engine nearby? For a first cut, we could simply try to detect whether a fire engine is in the video feed from the drone. For our purposes here, we're not going to worry about where it is, if it's moving, how far away it is, what direction it's going, or anything like that. We're talking about a pretty dumb autopilot, but if you're hanging around fires with your drone, maybe you need the help :)

So our decision process would be something like:

See a fire engine? --> Land.

No fire engines in sight? --> Keep flying.
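As a rough sketch, that rule fits in a few lines of Python. The function name `choose_action` and the 0.5 threshold are made up for illustration; the only input is whatever probability a classifier assigns to "there is a fire engine in this frame".

```python
def choose_action(fire_engine_probability, threshold=0.5):
    """Map a classifier's fire-engine probability for one frame to an action.

    The threshold is an illustrative assumption, not a tuned value.
    """
    if not 0.0 <= fire_engine_probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # See a fire engine? --> Land. Otherwise keep flying.
    if fire_engine_probability >= threshold:
        return "land"
    return "keep flying"


if __name__ == "__main__":
    for p in (0.9, 0.1):
        print(p, "->", choose_action(p))
```

A real autopilot would probably want to look at several frames before acting, but this is enough for the experiment here.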

Image classification with ML would be a good place to start. Essentially, given a video frame or image, we want to classify whether the image has a fire truck in it. For training ML models, more data is usually better, but you don't need a ton to get started. I compiled a dataset of around 1,000 images with fire engines in them, and another 1,000 without. The fire engines came from the ImageNet dataset, and the non-fire engines from the Stanford Cars dataset.

Keras is a machine learning toolkit that has some pre-built models vetted over large data sets. We could use InceptionV3, a neural net architecture from Google. Unfortunately, training it from scratch on our 2k images doesn't give great results. After the net was trained, I picked a few images and checked its predictions:

| Predicted | Actual | Confidence |
|---|---|---|
| no | yes | 52.6% |
| no | yes | 52.6% |
| no | yes | 51.6% |
| no | yes | 52.7% |
| no | yes | 52.2% |
| no | no | 52.3% |
| no | no | 51.6% |
| no | no | 52.3% |

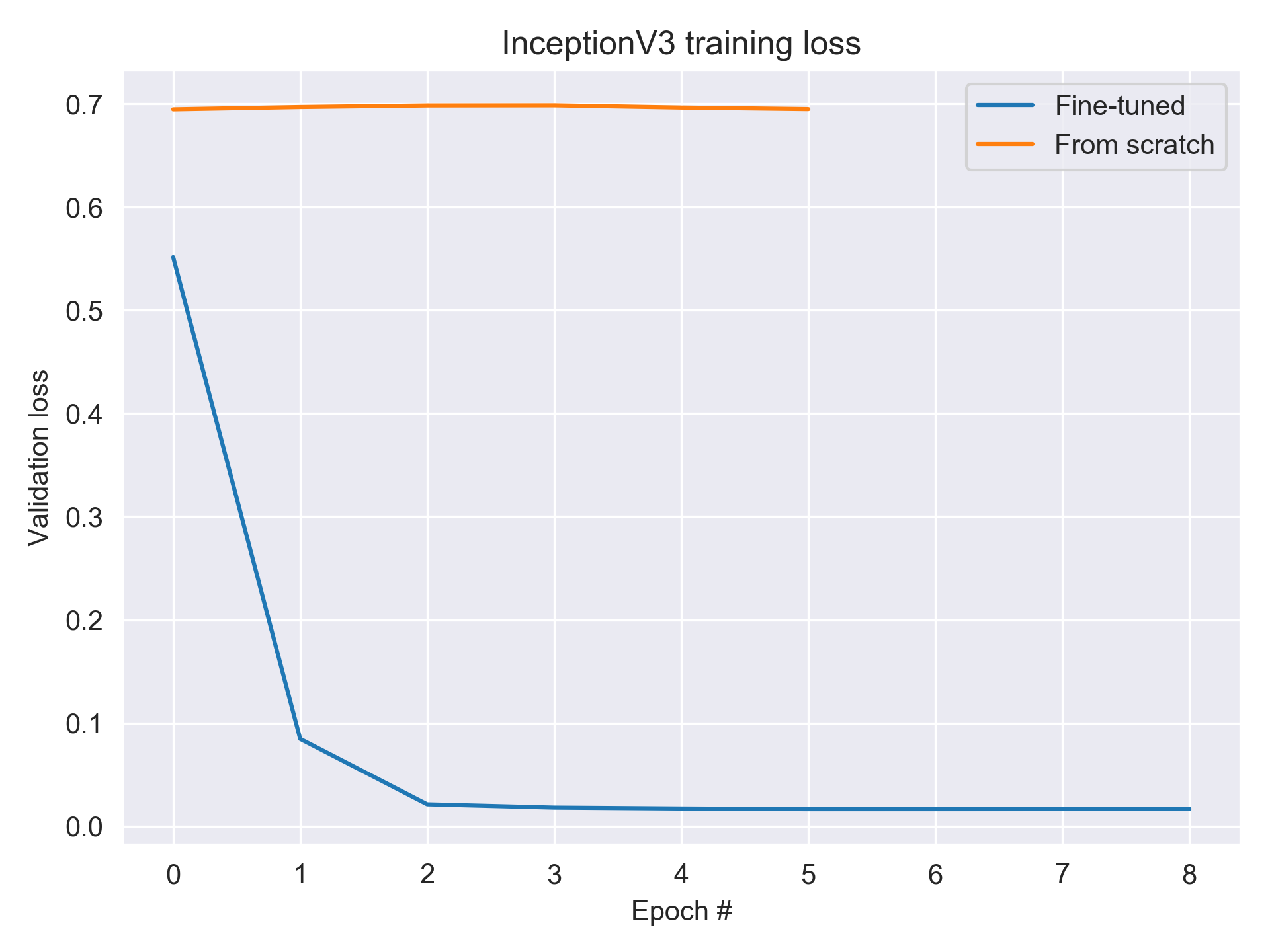

Not good, and not confident! 50% is the same as random guessing. The poor quality was kind of to be expected - the loss measured on my validation image set never got below ~0.7. I suspect more example images would be needed to push that down.
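One way to read that ~0.7: assuming the loss was binary cross-entropy (a common choice for two-class models, though it's an assumption here), a model that answers 50/50 on every image scores ln 2, or about 0.693. The helper below is a plain-Python illustration, not the Keras implementation.

```python
import math


def binary_cross_entropy(y_true, p_pred):
    """Cross-entropy for one example: y_true is 0 or 1, p_pred is P(class 1)."""
    eps = 1e-12  # keep log() away from exactly 0 or 1
    p = min(max(p_pred, eps), 1 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


# A classifier that always says "50% fire engine" gets the same loss
# whether the image actually contains one or not.
coin_flip_loss = binary_cross_entropy(1, 0.5)
print(round(coin_flip_loss, 3))  # 0.693
```

So a validation loss stuck around 0.7 is consistent with a net that hasn't learned much beyond a coin flip.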

How can we improve on this? Keras can also supply models with weights pre-trained on the 1,000 ImageNet object classes, and one of those classes is fire engine.

It's common to measure image classifiers by top-1 or top-5 accuracy. Top-1 is how often the actual class is the algorithm's single best guess; top-5 is how often the actual class is somewhere in the top 5 classes it predicts.
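Here's a small sketch of how top-k accuracy can be computed. The function name and the toy labels are made up for illustration; each prediction is a list of class names sorted from most to least confident.

```python
def top_k_accuracy(ranked_predictions, true_labels, k):
    """Fraction of examples whose true label appears in the first k guesses."""
    if len(ranked_predictions) != len(true_labels):
        raise ValueError("need one ranked list per true label")
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return hits / len(true_labels)


# Toy data (not real model output): three images, all actually fire engines.
toy_ranked = [
    ["fire_engine", "tow_truck", "ambulance"],  # correct at rank 1
    ["ambulance", "fire_engine", "minivan"],    # correct at rank 2
    ["minivan", "tow_truck", "ambulance"],      # never guessed
]
toy_truth = ["fire_engine"] * 3

top1 = top_k_accuracy(toy_ranked, toy_truth, k=1)
top2 = top_k_accuracy(toy_ranked, toy_truth, k=2)
```

In this toy case one of three images is a top-1 hit and two of three are top-2 hits.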

So how does InceptionV3 fare with pre-trained weights? Not good! In a sample of 5 fire truck images, here's what the out-of-the-box pretrained model predicts:

| Rank of fire engine class | Confidence |
|---|---|
| #1 | 71.5% |
| #3 | 5.6% |
| Not in top 5 | n/a |
| #3 | 1.1% |
| #1 | 18.8% |

This confidence metric is a little different - it's split across 1,000 object types. So if the net predicts fire truck with 71% confidence, the other 999 add up to the remaining 29%.
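To make that concrete: classifiers like this typically end in a softmax layer, which turns one raw score per class into probabilities that sum to 1. The sketch below is plain Python with made-up scores, just to show the "split across 1,000 classes" behavior.

```python
import math


def softmax(scores):
    """Convert raw per-class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for 1,000 classes: one class stands out, the rest are equal.
scores = [0.0] * 1000
scores[42] = 8.0  # index 42 is arbitrary, not a real ImageNet class index
probs = softmax(scores)

best = max(range(len(probs)), key=probs.__getitem__)
rest = sum(probs) - probs[best]  # what the other 999 classes share
```

Whatever the top class gets, the other 999 classes share the remainder, which is why even a correct top-1 guess can come with a fairly modest percentage.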

This still isn't good. We do have another trick up our sleeve though. Since we only care about two classes - fire truck and not-fire-truck, we can re-train the pre-trained model with our data set. This is transfer learning, and the particular version we're doing is called fine-tuning.

We're going to take the original neural net and original weights, add some new layers, and train just our new layers. Once our new layers are "warmed up", we can tune the whole net to squeeze the last drops out of the model.
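Here's a rough sketch of that two-phase setup in Keras. It is not necessarily the exact training code used for the results in this post: the size of the new head (256 units), the single sigmoid output, the learning rates, and the function names are all assumptions for illustration.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_model(weights="imagenet", input_shape=(299, 299, 3)):
    """InceptionV3 base with frozen weights plus a small new binary head."""
    base = keras.applications.InceptionV3(
        weights=weights, include_top=False, input_shape=input_shape
    )
    base.trainable = False  # phase 1: only the new layers learn

    inputs = keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # keep batch norm in inference mode
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dense(256, activation="relu")(x)  # head size is an assumption
    outputs = layers.Dense(1, activation="sigmoid")(x)  # P(fire engine)

    model = keras.Model(inputs, outputs)
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-3),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return base, model


def unfreeze_for_fine_tuning(base, model, learning_rate=1e-5):
    """Phase 2: unfreeze the base and recompile with a small learning rate."""
    base.trainable = True
    model.compile(  # recompiling is needed for the trainable change to apply
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model


# Usage sketch (train_ds and val_ds are hypothetical tf.data datasets):
# base, model = build_model()
# model.fit(train_ds, validation_data=val_ds, epochs=5)   # warm up new layers
# unfreeze_for_fine_tuning(base, model)
# model.fit(train_ds, validation_data=val_ds, epochs=5)   # tune the whole net
```

The small learning rate in the second phase is the usual precaution so that the pre-trained weights get nudged rather than overwritten.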

How well does it work? Glad you asked! With another sample of 5 positives and 3 negatives, the fine-tuned net gets all 8 correct.

| Fire engine? | Confidence |
|---|---|
| yes | 99.4% |
| yes | 98.7% |
| yes | 85.0% |
| yes | 96.3% |
| yes | 91.6% |
| no | 94.4% |
| no | 79.8% |
| no | 84.3% |

On top of that, using the pre-trained weights decreased the training time per epoch from over 20 minutes to around two and a half. This was on an older desktop CPU as well - if you wanted to really juice the training speed, you could use a GPU for training.

Visualizing learning

You can plot the loss of the net over time, measured against a validation set of images, kept separate from the training set. Here's what that looked like for the nets I described here:
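For reference, a plot like this can be drawn from the `history` dictionary on the object that Keras's `fit` returns, which holds a `val_loss` list when validation data is supplied. The sketch below assumes matplotlib is installed; the function name and output path are made up for illustration.

```python
import matplotlib

matplotlib.use("Agg")  # render to a file, so no display is needed
import matplotlib.pyplot as plt


def plot_validation_loss(histories, out_path="val_loss.png"):
    """Plot validation loss per epoch for one or more training runs.

    histories maps a run name to a dict with a "val_loss" list, such as
    the .history attribute of the object returned by model.fit.
    """
    fig, ax = plt.subplots()
    for name, hist in histories.items():
        losses = hist["val_loss"]
        epochs = range(1, len(losses) + 1)
        ax.plot(epochs, losses, marker="o", label=name)
    ax.set_xlabel("Epoch")
    ax.set_ylabel("Validation loss")
    ax.legend()
    fig.savefig(out_path)
    plt.close(fig)
    return out_path


# Usage sketch (scratch_run and tuned_run are hypothetical fit() results):
# plot_validation_loss({
#     "from scratch": scratch_run.history,
#     "fine-tuned": tuned_run.history,
# })
```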

Next Steps

So we've found fine-tuning the pre-trained network weights for our specific goals improves accuracy dramatically. There's still a lot of room for improvement. Some ideas on how to make it better:

- Recognize other emergency vehicle types, like ambulances or police vehicles. The ImageNet 1,000 includes classes for both ambulances and police vans, so given a moderate training sample I would expect you could see similar accuracy improvements.

- Localize the vehicle in the image. This would let you determine relative positions of e.g. the drone and the emergency vehicle, and navigate safely away. This would mean using a different neural net architecture. R-CNN and family or YOLO would be a good place to start, especially YOLOv3.

- More efficient neural nets. There is always a balance between accuracy, speed, processing power, and memory usage for these applications. Typically the processors on drones are relatively low-powered, and time is somewhat critical, so you want to be as lean as possible. On the other hand, you want to actually detect emergency vehicles. These pre-trained nets are unlikely to be optimal for any given hardware configuration. I briefly tried training on the same dataset with MobileNetV2, another pre-trained model in Keras, but the results were very poor - it only got 2/8 correct, with confidences all over the place to boot.

I trained on my desktop machine, using CPU only. All training sessions pinned my CPU. That's good - it should be using all my cores. For the from-scratch training, RAM usage peaked just under 16GB. For the fine-tuning, it peaked around 10GB. I didn't look into the reason for the difference - my guess would be that TensorFlow has some smarts about which layers are frozen and which are being trained. Fine-tuning was also around 10x faster per epoch.

The code I used to train the net is available on GitHub. Hope you found this useful!